MIT Researchers Develop Brain-Inspired AI Model for Long-Sequence Prediction


A team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) has introduced a new artificial intelligence model inspired by neural dynamics in the brain. It is designed to improve how machine learning systems process complex data that unfolds over long sequences.

The model, called LinOSS (Linear Oscillatory State-Space Model), was developed by researchers T. Konstantin Rusch and CSAIL Director Daniela Rus. It draws on the principle of forced harmonic oscillators, a concept rooted in physics and also observed in biological neural networks, to provide a stable and computationally efficient way to model sequences that span hundreds of thousands of data points.
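To make the oscillator idea concrete, here is a minimal sketch, not the authors' code: a bank of independent forced harmonic oscillators y'' = -a·y + b·u(t), one per hidden dimension, advanced with an implicit time step. It assumes the oscillators are uncoupled (as with a diagonal parameterization) and driven by a single scalar input; the function name `simulate_oscillators` and all parameter values are hypothetical.

```python
import numpy as np

def simulate_oscillators(u, a, b, dt):
    """Drive independent oscillators y'' = -a*y + b*u(t) (hypothetical helper).

    u:  1-D array, scalar input signal of length T
    a:  nonnegative stiffness (squared angular frequency) per oscillator
    b:  input weight per oscillator
    dt: time step
    Returns positions y with shape (T, len(a)).
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    s = 1.0 / (1.0 + dt**2 * a)  # implicit-step scaling, in (0, 1] when a >= 0
    y = np.zeros_like(a)         # positions
    z = np.zeros_like(a)         # velocities, z = y'
    out = np.empty((len(u), len(a)))
    for n, u_n in enumerate(u):
        # Solve z_n = z_{n-1} + dt*(-a*y_n + b*u_n) and y_n = y_{n-1} + dt*z_n
        # jointly; eliminating y_n gives the closed-form update below.
        z = s * (z - dt * a * y + dt * b * u_n)
        y = y + dt * z
        out[n] = y
    return out

# Impulse response over a long sequence: one kick at t = 0, then silence.
u = np.zeros(20_000)
u[0] = 1.0
ys = simulate_oscillators(u, a=[0.5, 2.0, 10.0], b=[1.0, 1.0, 1.0], dt=0.1)
print(ys.shape, np.abs(ys).max(), np.abs(ys[-1]).max())
```

Each oscillator rings at its own frequency, and because the implicit step slightly damps the motion whenever a ≥ 0, the response stays bounded over 20,000 steps and gradually fades instead of exploding.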

Cracking the Long-Sequence Challenge

AI models have long struggled to learn patterns in time series and other sequential data, particularly when those sequences stretch over long periods, as in climate modeling, biosignal monitoring, and financial trend forecasting. State-space models (SSMs) have emerged as a promising tool for this problem, but many existing variants suffer from instability or high computational costs when scaled up.
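The cost question comes down to how a linear recurrence is evaluated. A recurrence h_n = M_n·h_{n-1} + c_n is a chain of affine maps, and composing affine maps is associative, so all T states can be computed with a parallel prefix scan in about log2(T) vectorized passes instead of T strictly sequential steps. Linear SSMs, LinOSS included, are commonly evaluated this way. The sketch below is a generic illustration of the technique, not code from the LinOSS paper: it uses a simple Hillis-Steele scan in NumPy and checks the result against a plain loop. The function names and the random 2×2 transitions are hypothetical.

```python
import numpy as np

def combine(earlier, later):
    """Compose affine maps h -> M @ h + c, applying `earlier` first, then `later`."""
    m1, c1 = earlier
    m2, c2 = later
    return m2 @ m1, np.einsum("...ij,...j->...i", m2, c1) + c2

def parallel_scan(ms, cs):
    """Hillis-Steele inclusive scan: h_n = ms[n] @ h_{n-1} + cs[n], with h_{-1} = 0.

    Needs only ceil(log2(T)) passes; each pass is one vectorized operation
    that parallel hardware could apply to all positions at once.
    """
    ms, cs = ms.copy(), cs.copy()
    shift = 1
    while shift < len(ms):
        # Position n absorbs the already-combined block ending at n - shift.
        new_ms, new_cs = combine((ms[:-shift], cs[:-shift]), (ms[shift:], cs[shift:]))
        ms[shift:], cs[shift:] = new_ms, new_cs
        shift *= 2
    return cs  # the composed map applied to h_{-1} = 0 is just its offset

def sequential_scan(ms, cs):
    """Reference implementation: T strictly sequential steps."""
    h = np.zeros(cs.shape[1])
    out = np.empty_like(cs)
    for n in range(len(ms)):
        h = ms[n] @ h + cs[n]
        out[n] = h
    return out

rng = np.random.default_rng(0)
T = 1_000
ms = rng.uniform(-0.5, 0.5, size=(T, 2, 2))  # hypothetical time-varying transitions
cs = rng.standard_normal((T, 2))             # hypothetical input contributions
h_par = parallel_scan(ms, cs)
h_seq = sequential_scan(ms, cs)
print(np.max(np.abs(h_par - h_seq)))
```

Hillis-Steele does O(T log T) total work; work-efficient variants such as the Blelloch scan reduce that to O(T) while keeping the logarithmic depth, which is why scan-based evaluation scales to very long sequences.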

LinOSS addresses these limitations head-on.

“Our goal was to capture the stability and efficiency seen in biological neural systems and translate these principles into a machine learning framework,” said Rusch.

Earlier SSMs typically guarantee reliable predictions only by imposing restrictive conditions on how the model is designed. LinOSS achieves stable predictions under far less restrictive conditions, yielding a system that captures long-range interactions both stably and efficiently, which is crucial for applications that require precise forecasting or classification over extended sequences.
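Why stability depends on design choices: a linear recurrence stays bounded over long sequences only if its state-transition matrix has no eigenvalue with magnitude above 1. The sketch below compares three textbook ways of discretizing a single undriven oscillator y'' = -a·y, with state (z, y) and z = y'. It illustrates the general principle under those simplifying assumptions and is not the authors' code; the helper names are hypothetical. Based on the published paper, LinOSS offers an implicit variant, stable for any a ≥ 0 and step Δt > 0, and an implicit-explicit variant whose eigenvalues stay on the unit circle when Δt²·a ≤ 4. Plain explicit Euler, included for contrast, is unstable for every a > 0.

```python
import numpy as np

# Hypothetical helper: 2x2 transition matrix for the state (z, y), z = y',
# of one undriven oscillator y'' = -a*y advanced by one step of size dt.
def transition(a, dt, scheme):
    if scheme == "explicit":   # both updates use the old state
        return np.array([[1.0, -dt * a], [dt, 1.0]])
    if scheme == "imex":       # z uses the old y; y uses the new z
        return np.array([[1.0, -dt * a], [dt, 1.0 - dt**2 * a]])
    if scheme == "implicit":   # z uses the new y; y uses the new z
        s = 1.0 / (1.0 + dt**2 * a)
        return np.array([[s, -dt * a * s], [dt * s, s]])
    raise ValueError(f"unknown scheme: {scheme}")

def spectral_radius(m):
    """Largest eigenvalue magnitude; above 1 means the state grows over long sequences."""
    return float(np.max(np.abs(np.linalg.eigvals(m))))

SCHEMES = ("explicit", "imex", "implicit")
grid = [(a, dt) for a in (0.0, 0.5, 2.0, 50.0) for dt in (0.01, 0.1, 1.0)]
radii = {s: [spectral_radius(transition(a, dt, s)) for a, dt in grid] for s in SCHEMES}

for s in SCHEMES:
    print(f"{s:>8}: max |eigenvalue| over grid = {max(radii[s]):.4f}")
```

For the implicit scheme the eigenvalue magnitude works out to 1/√(1 + Δt²·a), which never exceeds 1. That is the sense in which a scheme can be stable without tight constraints on its parameters.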

Mathematical Rigor Meets Practical Power

In addition to its biological inspiration, LinOSS is grounded in rigorous mathematics. The researchers proved that the model is a universal approximator: it can approximate any continuous, causal function between input and output sequences to arbitrary accuracy, an important theoretical guarantee for a sequence model.
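For reference, the continuous-time system at the core of LinOSS is a bank of forced harmonic oscillators with hidden state y(t), driven by an input u(t) and read out as x(t), with A a diagonal matrix with nonnegative entries. This is a sketch of the formulation as presented in the ICLR 2025 paper; the notation here may differ slightly from the original.

```latex
\begin{aligned}
\mathbf{y}''(t) &= -\mathbf{A}\,\mathbf{y}(t) + \mathbf{B}\,\mathbf{u}(t) + \mathbf{b},\\
\mathbf{x}(t)   &= \mathbf{C}\,\mathbf{y}(t) + \mathbf{D}\,\mathbf{u}(t).
\end{aligned}
```

Because this system is linear in u, the universality result necessarily relies on the nonlinear components placed between or after such layers; a single linear layer on its own can only represent linear maps.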

In head-to-head benchmarking, LinOSS outperformed leading models across a variety of sequence classification and forecasting tasks. Notably, on tasks involving sequences of extreme length, it outperformed the widely used Mamba model by nearly a factor of two.

The significance of the work has already been recognized: it earned a rare oral presentation slot at ICLR 2025, an honor reserved for the top 1% of submissions to one of the most competitive machine learning conferences in the world.

Broad Applications Across Science and Industry

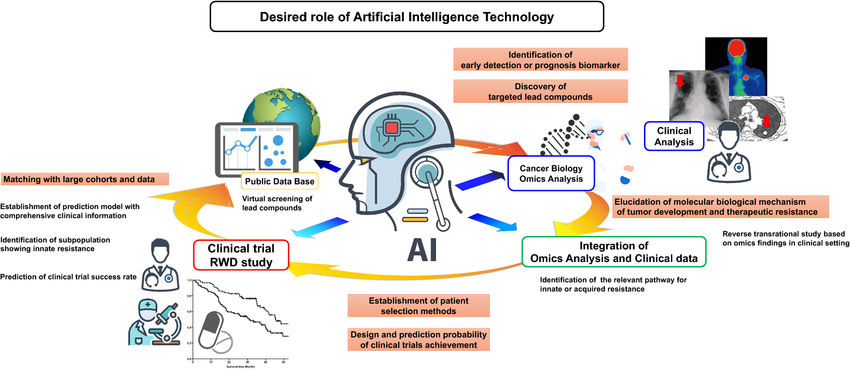

LinOSS could have wide-ranging implications. According to the CSAIL team, it could benefit any field that relies on long-horizon forecasting or sequential data classification. Potential use cases include:

- Healthcare analytics: Accurate patient monitoring over long durations

- Climate science: Modeling long-term environmental changes

- Autonomous driving: Interpreting sensor data over time

- Finance: Detecting and forecasting complex market patterns

“This work exemplifies how mathematical rigor can lead to performance breakthroughs and broad applications,” said Rus. “With LinOSS, we’re providing the scientific community with a powerful tool for understanding and predicting complex systems, bridging the gap between biological inspiration and computational innovation.”

Future Directions and Neuroscientific Potential

The MIT researchers plan to expand LinOSS to cover new data modalities and further explore its applications in scientific domains. Interestingly, they believe that LinOSS may also shed light on the workings of the human brain, given its roots in biological neural dynamics.

The project was supported by the Swiss National Science Foundation, the Schmidt AI2050 initiative, and the U.S. Department of the Air Force Artificial Intelligence Accelerator.